EU Research Project

Scientific contact

Max Planck Institute for Biological Cybernetics

heinrich.buelthoff[at]tuebingen.mpg.de

Newsletters

Newsletter #1, September 2011

Newsletter #2, March 2012

Newsletter #3, November 2012

Newsletter #4, August 2013

Newsletter #5, August 2014

Newsletter #6, March 2015

Videos

Project Day demonstrations

| Demonstration | Video |
| --- | --- |
| DLR Flying Helicopter Simulator | Watch the video here |
| DLR steering wheel control | Watch the video here |
| MPI haptic simulator | Watch the video here |
| UoL PAV desktop simulation | Watch the video here |
| ETHZ vision-based navigation | Watch the video here |
| EPFL-CVLAB landing place assessment | Watch the video here |
| EPFL-LIS collision avoidance swarm demo | Watch the video here |
| KIT World Café | Watch the video here |

Steering wheel control

We have developed a generic dynamics model that represents PAV-like flight behaviour. It can be rapidly configured to represent vehicle response types that confer different levels of handling qualities upon the simulated PAV. The dynamic model includes sophisticated vehicle response types that are suited to certain types of tasks in certain parts of the flight envelope. Therefore, a hybrid response type has been developed that automatically provides the optimum response type to the pilot depending on the flight condition.

The video can be watched here.

Simulated PAV flight

We have developed a generic dynamics model that represents PAV-like flight behaviour. It can be rapidly configured to represent vehicle response types that confer different levels of handling qualities upon the simulated PAV. The dynamic model includes sophisticated vehicle response types that are suited to certain types of tasks in certain parts of the flight envelope. Therefore, a hybrid response type has been developed that automatically provides the optimum response type to the pilot depending on the flight condition.

The video can be watched here.

Swarming and flocking of PAVs

Collision avoidance is paramount in high-density environments in which many personal aerial vehicles (PAVs) operate simultaneously. Within myCopter, we are taking inspiration from nature and developing swarming and flocking algorithms for PAVs based on animal swarms and human crowd behaviour. We have developed simulation software that reproduces realistic vehicle behaviour and can be run in real time or in an accelerated mode. Collision avoidance strategies are implemented in this simulation software so that vehicle behaviour can be analysed in detail.
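As a rough illustration of the kind of flocking rule mentioned above, the sketch below computes a simple "separation" velocity that pushes a vehicle away from neighbours that come too close. It is a generic, boids-style example and not the myCopter algorithm; the function name, the 2D positions, and the `safe_distance` and `gain` parameters are all assumptions made for illustration.

```python
import math

def separation_velocity(own_pos, neighbour_positions, safe_distance=10.0, gain=1.0):
    """Return a 2D velocity pushing away from neighbours closer than safe_distance.

    Hypothetical helper for illustration only; not taken from the myCopter software.
    """
    vx, vy = 0.0, 0.0
    for nx, ny in neighbour_positions:
        dx, dy = own_pos[0] - nx, own_pos[1] - ny
        dist = math.hypot(dx, dy)
        if 0.0 < dist < safe_distance:
            # Push strength grows linearly as the neighbour gets closer.
            strength = gain * (safe_distance - dist) / safe_distance
            vx += strength * dx / dist
            vy += strength * dy / dist
    return vx, vy
```

In a full flocking scheme, a term like this would typically be combined with alignment and cohesion terms and with the vehicle's own navigation goal.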

The video can be watched

here.

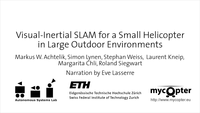

here.Parallel tracking and mapping

We have implemented a high-performance parallel tracking and mapping system to achieve monocular self-localisation and mapping on board a small aerial vehicle. Because of the long trajectories that are flown, the highly dynamic motion of the vehicle, and dynamic objects in the scene, we use additional sensors, including an inertial measurement unit (IMU) with accelerometers and gyroscopes, a magnetometer, an air-pressure sensor, and GPS where available.

The video of this system was presented at the IEEE/RSJ International Conference on Intelligent Robots and Systems 2012. The framework that we have developed is publicly available. ethzasl_sensor_fusion and asctec_mav_framework have been developed partially in the myCopter project.

The video can be watched

here.

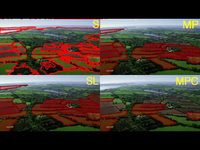

here.Real-time landing place assessment

Currently, automatic landing systems rely heavily on beacons and other ground-based systems to determine the path towards a landing spot. In the myCopter project we are developing vision algorithms that can perform robust, real-time automated landing place assessment in man-made environments. With our approach, landing sites are identified as featureless but regularly shaped areas in the image, which typically characterise man-made landing structures. Examples include prepared landing surfaces such as runways and landing pads, as well as unprepared ones, including grass fields, dirt strips and building rooftops. This video shows a novel image segmentation and shape regularity measure that we have developed.
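To give a feel for what a shape regularity score can look like, the sketch below computes the rectangularity of a segmented region: the fraction of its axis-aligned bounding box that the region fills. This is a common textbook measure used here as a stand-in; it is not the novel measure developed in the project, and the function name and the list-of-lists mask format are assumptions.

```python
def rectangularity(mask):
    """Ratio of region area to bounding-box area (1.0 means a filled rectangle).

    `mask` is a list of rows of 0/1 values marking the segmented region.
    Illustrative stand-in only; not the myCopter shape regularity measure.
    """
    cells = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not cells:
        return 0.0
    rows = [r for r, _ in cells]
    cols = [c for _, c in cells]
    # Area of the smallest axis-aligned box that contains every region cell.
    box_area = (max(rows) - min(rows) + 1) * (max(cols) - min(cols) + 1)
    return len(cells) / box_area
```

A region that scores close to 1.0 fills its bounding box almost completely, as an axis-aligned landing pad would; a practical system would also need to handle rotated regions and perspective distortion.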

The video can be watched here.